When AI thinks for students: The silent erosion of critical thinking in classrooms

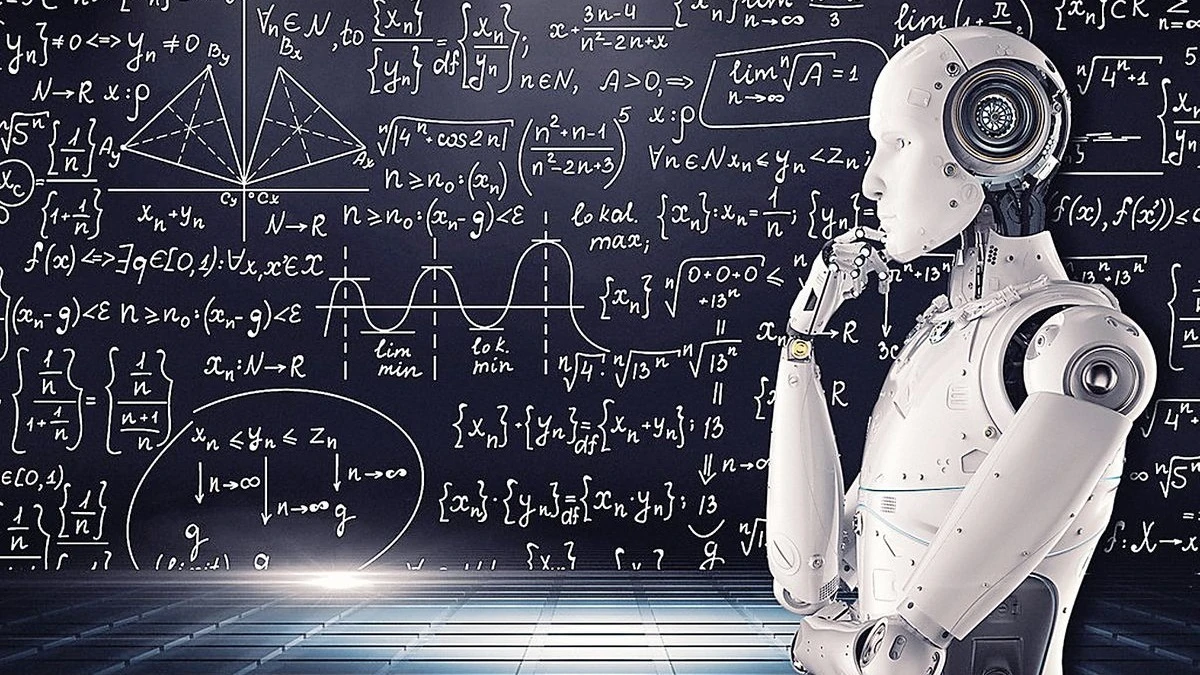

In lecture halls from Dar es Salaam to Boston, something subtle but powerful is changing. You can’t always see it: there’s no loud disruption, no flickering screens. But it’s there. Students are leaning back a little more. Eyes scan less; fingers hesitate longer above the keyboard. And slowly, silently, artificial intelligence is beginning to think on their behalf.

At the Massachusetts Institute of Technology, researchers at the MIT Media Lab set out to understand what that shift means for student learning. Led by Professors Pattie Maes and Cynthia Breazeal—both global leaders in media technology and human-computer interaction—the study tracked how students’ brains reacted while using AI to write essays compared to doing the work unaided. They didn’t just analyze sentence structure or grammar.

They strapped EEG sensors to participants’ heads and examined the very electrical patterns that signaled engagement, focus and memory. What they found has sparked conversations far beyond the walls of MIT.

Students who leaned heavily on AI tools like ChatGPT and DeepSeek were less cognitively engaged. Their brains lit up less, as if they were merely watching a screen rather than thinking through their arguments. Many couldn’t remember key quotes or ideas from their essays. One participant admitted, “It felt like the essay wasn’t even mine. I couldn’t tell you how I reached those points.”

That lack of ownership, researchers say, is a red flag. And the most striking moment came when roles were swapped: students who had written manually were now allowed to use AI, and vice versa. Those who moved from AI back to manual writing struggled far more than expected: thinking felt heavier, and ideas were slower to come. It was as if their cognitive muscles had atrophied, a cost the researchers began calling “cognitive debt.”

In Tanzania, where the push for digital transformation in education is accelerating, the study feels less like a warning from afar and more like a mirror. Across universities such as Tumaini University Makumira, the Open University of Tanzania, and the University of Dodoma, students are already grappling with the very questions MIT has raised.

A second-year student from the Open University of Tanzania (OUT), speaking on condition of anonymity, described the convenience of AI during crunch time. “You just tell ChatGPT what you need, and it delivers. But the hard part comes when the lecturer asks you to defend your ideas in class. You realise you don’t remember half of what you submitted.”

Another student from Makumira University echoed that sentiment, confessing that using AI made him feel strangely passive. “You don’t go through the struggle. You paste, tweak a bit, and move on. But it’s like eating without chewing—you’re full, but not nourished.”

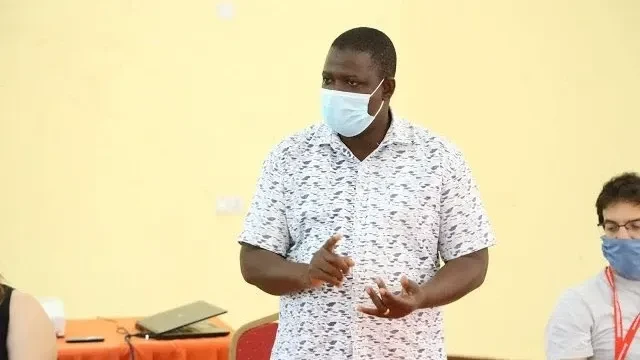

Lecturers have noticed the shift too. At the Institute of Accountancy in Arusha, Jovin John, who teaches Management in Public Administration, has had enough. “I hate AI in the classroom,” he said flatly. “It robs students of the intellectual struggle that builds character. Anyone can submit polished work now, but the depth is gone. I’d rather get a flawed but original idea than a clean paragraph that the student doesn’t even understand.” His concern isn’t just about cheating—it’s about erosion. Of thinking. Of discipline. Of effort.

Yet the truth is, students aren’t wrong for turning to AI. Many Tanzanian university assignments still follow rigid formats, rarely changing year to year, with prompts that invite repetition over reflection. “We’re asked to regurgitate answers,” said a third-year student at the University of Dodoma. “Not to think, but to remember. That’s why people take shortcuts. There’s no room for new ideas.”

The contradiction is stark: education systems that prize conformity are now colliding with tools designed for speed and originality. And somewhere in the middle, students are losing their grip on how to think.

Globally, educators are starting to reimagine what learning should look like in an AI-driven world. Prof Emeritus Alan Watson of the University of New South Wales doesn’t mince words. “AI doesn’t think—it predicts,” he said during a panel on education futures.

“When we let it dominate how students learn, we’re no longer teaching them to deal with ambiguity, contradiction, or failure. We’re training parrots, not problem-solvers.” Watson believes AI can play a valuable role in modern classrooms, but only if it’s framed correctly: not as a replacement for thought, but as a tool that challenges it. “Ask students to critique an AI-generated response. Make them fight with it. That’s where real learning begins.”

That balance between using AI and being used by it is where many Tanzanian educators are beginning to find new ground. At Muhimbili University of Health and Allied Sciences, for instance, one lecturer gave students an unusual assignment: use ChatGPT to write a policy brief on maternal health, but then submit a critical reflection on what the AI got wrong or missed. “That made me think more than any other assignment,” a student recalled. “I realised AI sounded confident, but it lacked context. It didn’t understand local realities.”

It’s a glimpse of what responsible AI use might look like in education—not abandoning critical thinking, but sharpening it through confrontation. And it points to a wider need: not just for smarter students, but smarter systems. Systems that recognize when technology is a hammer and when it’s a scalpel.

Yet many Tanzanian institutions remain in limbo. There are no national guidelines on AI use in education, no curriculum revisions that account for generative tools. While some lecturers ban AI outright—often penalizing students caught using it—others quietly look the other way, unsure how to respond. The result is a patchwork of confusion, where students navigate a digital grey zone with little guidance.

Kusiluka Aginiwe of Ekima Interactive believes that limbo is dangerous. “Technology is like water,” he said. “You can’t stop it—you can only channel it. If we keep banning AI in schools while the world races ahead with it, we’re setting our students up to fail.”

For him, the answer lies not in fear, but in structure. He advocates for clear national frameworks that integrate AI literacy into both secondary and higher education, preparing students for the realities of a changing workforce. “Every major industry is adopting AI—from banking to agriculture. If our graduates can’t use these tools responsibly, they won’t be competitive.”

The MIT study doesn’t suggest abandoning AI. Rather, it points toward a crossroads. Used passively, AI dulls the very skills education aims to sharpen. But used with intention—within redesigned assignments that demand judgment, creativity, and reflection—it can deepen learning in ways we’re only beginning to understand.

What’s needed now is not just a policy change, but a cultural change. A redefinition of what it means to learn. A rejection of the idea that knowledge equals perfect answers and smooth essays. And an embrace of education as a process—a sometimes messy, always demanding process—that AI can support, but never substitute.

As Prof Watson warned, the danger isn’t that AI will take over thinking. The danger is that we will let the illusion of thinking replace the real thing. And in doing so, forget the very purpose of education.

Tanzania, like many nations, now stands at that fork in the road. Ban AI, and risk irrelevance. Embrace it without reform, and risk intellectual decay. But choose a third path—one of guided integration, where students learn both with AI and despite it—and the future of learning could be more powerful than ever. The time to choose is now.

© 2025 IPPMEDIA.COM. ALL RIGHTS RESERVED